… What’s an Arthur Barthur?

Recently, I came across a bundle of blueprints for working with Unreal Engine’s native audio, all developed by ArthurBarthur and aptly named ArthursAudioBPs. The project is split into separate levels, each showcasing a different part of UE 4.25’s audio systems. I found the visualization levels the most interesting: they run real-time spectrum analysis on an audio submix or the master output and use the results to drive materials, colors, and lighting in the level. To better understand how these systems work and communicate with one another, I set out to study and refactor a few of Arthur’s audio blueprints. A quick note before breaking down the refactor: all credit for the original blueprints goes directly to ArthurBarthur.

Reducing Interdependency

When first exploring the levels in ArthursAudioBPs, I noticed that most of them shared a handful of objects. More specifically, each level had at least one “BP_Analysis Beautifier” and one “BP_Submix Analyzer”. On closer inspection, the former depends explicitly on the latter being somewhere in the level. The Analyzer acts as a liaison that passes along spectrum analysis results for a specific submix. Presumably, this split lets each level contain multiple Submix Analyzers, each assigned to a different submix. Beautifiers can then be assigned to whichever Analyzers they need, which keeps processing overhead down by avoiding multiple FFTs on the same submix. The setup, while understandable, is not immediately intuitive. To address this without giving up the key reason for separating them in the first place, I redesigned the Beautifier to act as either:

A standalone blueprint that analyzes and cleans up the results of a submix or master mix analysis internally (without the need for a separate analyzer), or…

A pass-through that bypasses internal FFT processing and references the results of a separate Submix Analyzer

This change means that, in a new level, you can drag and drop a single blueprint and immediately get cleanly processed, smoothed spectrum analysis data. If needed, you can then use the asset’s info panel (pictured above) to point it at a specific submix or at an external Submix Analyzer. An external analyzer overrides internal processing altogether.
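The either/or behavior described above comes down to one branch. If an external analyzer is assigned, the Beautifier reads that analyzer’s latest result. Otherwise it analyzes internally. Either way, it then smooths the result. The Python below is a minimal, engine-agnostic sketch of that idea. It is not Arthur’s blueprints or any Unreal API: `SubmixAnalyzer`, `Beautifier`, `analyze_fn` (a stand-in for whatever returns a raw magnitude per frequency), and the exponential smoothing factor are all hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

# Hypothetical stand-in for the engine query that returns a raw 0..1 magnitude for one frequency (Hz).
AnalyzeFn = Callable[[int], float]


@dataclass
class SubmixAnalyzer:
    """Hypothetical shared analyzer: runs one analysis per frame for one submix."""
    frequencies: List[int]
    analyze_fn: AnalyzeFn
    latest: Dict[int, float] = field(default_factory=dict)
    updates: int = 0  # counts how many analyses actually ran

    def update(self) -> None:
        # Called once per frame; Beautifiers that reference this analyzer
        # read `latest` instead of triggering their own analysis.
        self.latest = {f: self.analyze_fn(f) for f in self.frequencies}
        self.updates += 1


@dataclass
class Beautifier:
    """Hypothetical Beautifier: analyzes internally unless an external analyzer is set."""
    frequencies: List[int]
    analyze_fn: AnalyzeFn
    external: Optional[SubmixAnalyzer] = None
    smoothing: float = 0.5  # 0 = frozen, 1 = no smoothing
    _smoothed: Dict[int, float] = field(default_factory=dict, init=False, repr=False)

    def raw_levels(self) -> Dict[int, float]:
        if self.external is not None:
            # An external analyzer overrides internal processing entirely.
            return self.external.latest
        return {f: self.analyze_fn(f) for f in self.frequencies}

    def tick(self) -> Dict[int, float]:
        for freq, level in self.raw_levels().items():
            level = max(0.0, min(1.0, level))  # clamp to 0..1
            previous = self._smoothed.get(freq, 0.0)
            # Exponential moving average: move a fraction of the way toward the new value.
            self._smoothed[freq] = previous + self.smoothing * (level - previous)
        return dict(self._smoothed)
```

In a fresh level, `Beautifier([120, 3200], analyze_fn)` works on its own. Several Beautifiers can also point at one `SubmixAnalyzer`, and the analysis still runs only once per frame no matter how many of them read it.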

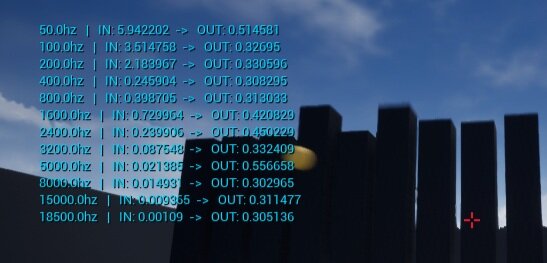

On the left is the array of frequency values that the user assigns on a Submix Analyzer. On the right is a map that adds a “compartment” to each frequency for storing and passing along relevant information.

Arrays vs Maps

In Arthur’s original blueprints, users enter their target frequencies in an exposed array on a Submix Analyzer. That array is then passed through the Analyzer, the Beautifier, and any other objects in the scene that respond to the results of the spectral analysis. It also determines the size of every downstream array, and each “slot” gets populated with relevant information such as the relative volume level on a scale of 0 to 1. However, if you inspect one of these arrays later on and look at a specific slot, nothing indicates which portion of the frequency spectrum that slot covers. The end result may be the same, but this setup makes debugging and configuration considerably harder. As a real-world example, say you want a cube in the scene to expand and contract with the volume level at slot #7 (3200 Hz). You have to go back to the original Submix Analyzer’s array to confirm which slot that is, then manually assign that slot for the cube to read from.

To remedy this, I first considered enumerations and structs. However, I found these data types considerably less flexible to work with on the fly: both are rather strictly defined, and programmatically adding or removing slots isn’t as clean as it is with arrays. Instead, I opted for maps, which pair keys with values in a dictionary that is easy to pass around and manipulate. Now, when a user defines a map of frequencies to analyze, all later manipulation of the data happens in the slot keyed by that frequency. New game objects in the scene can look up that information directly. See the debug text in the image here for a quick demonstration of how this refactor makes it easy to keep track of the frequency assigned to each slot.
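To make the array-versus-map difference concrete, here is a small Python sketch. It assumes made-up band frequencies, chosen so that index 7 lands on 3200 Hz as in the cube example, and uses integer Hz keys. None of these names come from the original blueprints; they are hypothetical.

```python
from typing import Dict, List

# Hypothetical target bands; index 7 happens to be 3200 Hz.
TARGET_FREQUENCIES: List[int] = [60, 120, 250, 500, 800, 1200, 2000, 3200]


def cube_scale_from_array(levels: List[float]) -> float:
    # Array version: the caller must remember that slot 7 means 3200 Hz.
    return 1.0 + levels[7]


def to_band_map(frequencies: List[int], levels: List[float]) -> Dict[int, float]:
    """Pair each target frequency with its level so every slot carries its own label."""
    if len(frequencies) != len(levels):
        raise ValueError("need exactly one level per target frequency")
    return dict(zip(frequencies, levels))


def cube_scale_from_map(bands: Dict[int, float]) -> float:
    # Map version: the lookup names the frequency it depends on.
    return 1.0 + bands[3200]


def debug_text(bands: Dict[int, float]) -> List[str]:
    """One label per slot, similar in spirit to per-slot debug text."""
    return [f"{freq} Hz: {level:.2f}" for freq, level in sorted(bands.items())]
```

With the map, adding or removing a band is just `bands[5000] = 0.0` or `del bands[60]`, and nothing downstream has to be renumbered. Integer keys also sidestep the exact-equality pitfalls of using floating-point numbers as dictionary keys.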

Applied Analysis

After the refactor, I wanted to apply the blueprints to something more tangible. With the recent release of Niagara, UE4’s new particle FX system, I decided to map real-time frequency analysis to factors like particle spawn rate, color, and position in space. Here’s a quick demo video!
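The post doesn’t spell out how each band drives the particle system, so the following is only a rough Python sketch of the mapping step. It assumes normalized 0..1 band levels keyed by integer Hz and uses invented parameter ranges. It computes plain values and leaves out how they would be handed to a Niagara system. The band choices, ranges, and the `ParticleParams` type are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a to b by t in 0..1."""
    return a + (b - a) * t


def clamp01(x: float) -> float:
    return max(0.0, min(1.0, x))


@dataclass
class ParticleParams:
    spawn_rate: float                  # particles per second (hypothetical range)
    color: Tuple[float, float, float]  # linear RGB, each 0..1
    height: float                      # vertical offset in world units (hypothetical)


def map_spectrum_to_particles(
    levels: Dict[int, float],
    bass_hz: int = 120,
    mid_hz: int = 1200,
    high_hz: int = 3200,
    min_rate: float = 50.0,
    max_rate: float = 2000.0,
    max_height: float = 300.0,
) -> ParticleParams:
    # Missing bands read as silence; out-of-range levels are clamped.
    bass = clamp01(levels.get(bass_hz, 0.0))
    mid = clamp01(levels.get(mid_hz, 0.0))
    high = clamp01(levels.get(high_hz, 0.0))
    return ParticleParams(
        spawn_rate=lerp(min_rate, max_rate, bass),  # louder bass -> more particles
        color=(bass, mid, high),                    # one band per color channel
        height=lerp(0.0, max_height, mid),          # mids lift the emitter
    )
```

Keeping this mapping as a pure function of the band map makes it easy to tweak ranges or swap bands without touching the analysis side.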

Afterthoughts

Looking back on the past few days, I’ve found refactoring to be an excellent way to learn. I could easily have spent the same amount of time watching YouTube videos and “covering more ground” (or rather, a wider variety of topics). Instead, this work proved invaluable for understanding the pros and cons of one seemingly minor choice over another. Working through someone else’s decision-making process one step at a time has always helped me write more efficient, understandable, and portable code. So Arthur, if you’re reading this, consider it a sincere thank-you for the work and generosity you’ve shown in building and sharing these blueprints.

Check out ArthursAudioBPs here!